Microprocessors vs. Microcontrollers vs. SoCs: All You Need to Know

What does the word “processor” mean, nowadays? People use it to talk about a bewildering array of microprocessor technologies, from humble 8-pin microcontrollers to today’s massively integrated, multi-die packages that keep pushing the boundaries of the smartphone revolution.

Once upon a time there were CPUs. And then there were microprocessors (MPUs), quickly followed by microcontrollers (MCUs). And finally there came the system-on-chip (SoC). With so much integration, the lines can start to blur.

Online, these terms are bandied about with such abandon that there’s real confusion about what they mean. In this article I’ll examine the differences in a practical way that relates to current technology.

But first, some history…

So What Actually is a CPU?

In computing classes we all learn that at the heart of every computer there is a central processing unit, or CPU.

The CPU runs the machine code program, the actual binary instructions stored in memory. It performs the arithmetic and logic operations and flow control, and is responsible for moving instructions and data to and from memory. It has no built-in ability to communicate with the outside world, and relies on external peripheral hardware to do that.
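
To make the fetch-decode-execute cycle concrete, here is a minimal sketch of a toy CPU in C. The instruction set (`OP_LOADI`, `OP_ADDI` and so on), the `ToyCpu` structure and the `toy_run` function are all invented for illustration and don't correspond to any real processor. Notice that it can only shuffle bytes around its own memory: like a real CPU, it has no way to reach the outside world without extra hardware.

```c
#include <assert.h>
#include <stdint.h>

/* A made-up 8-bit instruction set, for illustration only. */
enum {
    OP_HALT  = 0x00,  /* stop execution                         */
    OP_LOADI = 0x01,  /* acc = next byte                        */
    OP_ADDI  = 0x02,  /* acc += next byte                       */
    OP_STORE = 0x03,  /* mem[next byte] = acc                   */
    OP_DEC   = 0x04,  /* acc -= 1                               */
    OP_JNZ   = 0x05   /* if acc != 0, jump to address next byte */
};

typedef struct {
    uint8_t acc;       /* accumulator register                    */
    uint8_t pc;        /* program counter: address of next byte   */
    uint8_t mem[256];  /* code and data share one 256-byte memory */
} ToyCpu;

/* Fetch one byte at the program counter and advance it. */
static uint8_t fetch(ToyCpu *cpu) {
    return cpu->mem[cpu->pc++];   /* 8-bit pc wraps at 256, so always in range */
}

/* Run until OP_HALT; returns the number of instructions executed. */
unsigned toy_run(ToyCpu *cpu) {
    unsigned steps = 0;
    for (;;) {
        uint8_t opcode = fetch(cpu);   /* fetch */
        steps++;
        switch (opcode) {              /* decode, then execute */
        case OP_HALT:
            return steps;
        case OP_LOADI:
            cpu->acc = fetch(cpu);
            break;
        case OP_ADDI:
            cpu->acc += fetch(cpu);    /* wraps modulo 256, like an 8-bit ALU */
            break;
        case OP_STORE: {
            uint8_t addr = fetch(cpu);
            cpu->mem[addr] = cpu->acc;
            break;
        }
        case OP_DEC:
            cpu->acc--;
            break;
        case OP_JNZ: {
            uint8_t target = fetch(cpu);
            if (cpu->acc != 0) {
                cpu->pc = target;      /* flow control: change where the next fetch happens */
            }
            break;
        }
        default:
            assert(!"illegal opcode");
            return steps;
        }
    }
}
```

Loading the bytes `OP_LOADI, 40, OP_ADDI, 2, OP_STORE, 0x80, OP_HALT` at address 0 and calling `toy_run` executes four instructions and leaves 42 both in the accumulator and at address 0x80.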

Its name dates back to the time when the CPU was an entire refrigerator-sized cabinet in a computer centre. That’s why it was called a central processing unit, because there were lots of other processing units around it, handling memory, I/O, storage and so on.

But by the late 1960s things were changing fast. The computer of the day was called a minicomputer. It was only “mini” in comparison to the colossal mainframes that it superseded.

Over the course of the late 1960s, the CPU shrank dramatically until it fitted on a single circuit board, much like the motherboard in a modern desktop PC but literally stuffed with early logic chips.

And as miniaturisation accelerated, more and more logic got crammed onto each chip and the number of chips grew smaller and smaller, until it reached one.

A Brief History of the Microprocessor

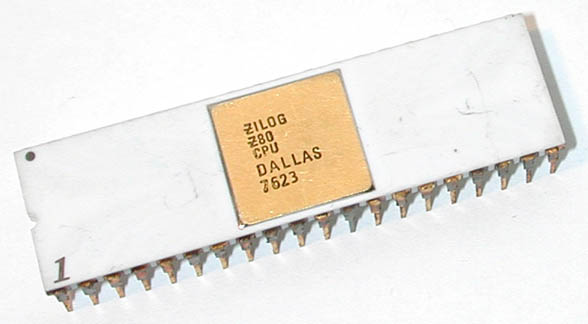

In 1971, after trying for some years, Intel was the first company to manage to squeeze an entire CPU onto a single piece of silicon called a microprocessor (or MPU).

The 4004 microprocessor was a mere 4 bits wide and only really suitable for the kind of binary-coded decimal (BCD) arithmetic used in calculators. But it was still a huge milestone. A year later Intel doubled the word size and released the first 8-bit microprocessor, the 8008, which was used in devices such as terminals. Then in 1974 it released the 8080. This could be used as the CPU in a proper computer, and the microcomputer was born in the form of the Altair 8800.
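
Binary-coded decimal stores one decimal digit in each 4-bit nibble, which is why a 4-bit CPU suited calculators so well. The sketch below adds two four-digit packed-BCD numbers one nibble at a time, applying the usual "add 6" decimal correction whenever a digit sum exceeds 9. It is a C illustration of the technique, not the 4004's actual code, and the function name `bcd_add4` is hypothetical.

```c
#include <assert.h>
#include <stdint.h>

/* Add two 4-digit packed-BCD numbers (one decimal digit per 4-bit nibble),
   working nibble by nibble the way a 4-bit datapath would.
   *carry_out (if non-NULL) receives the carry out of the top digit. */
uint16_t bcd_add4(uint16_t a, uint16_t b, int *carry_out) {
    uint16_t result = 0;
    unsigned carry = 0;
    for (int shift = 0; shift < 16; shift += 4) {
        unsigned da = (a >> shift) & 0xFu;
        unsigned db = (b >> shift) & 0xFu;
        assert(da <= 9 && db <= 9);       /* each nibble must be a valid decimal digit */
        unsigned sum = da + db + carry;   /* plain binary add: 0..19 */
        if (sum > 9) {
            sum += 6;                     /* decimal adjust: skip the unused codes 10..15 */
        }
        carry = sum >> 4;                 /* 1 if this digit overflowed past 9 */
        result |= (uint16_t)((sum & 0xFu) << shift);
    }
    if (carry_out) {
        *carry_out = (int)carry;
    }
    return result;
}
```

For example, `bcd_add4(0x0258, 0x0175, &carry)` returns `0x0433`: reading the hex digits straight off gives 258 + 175 = 433.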

Over the next few years, other companies released their own competing chips such as the Motorola 6800 and the highly successful MOS Technology 6502. And Intel’s 8080 inspired the successful Zilog Z80, but also spawned a lineage leading through the 8086 to the 80x86 family, on which an entire personal computer industry was built.

What all these microprocessors had in common was that they remained just CPUs. They needed a considerable amount of external hardware to make them do anything useful. Even a simple 8-bit microprocessor needs, as a bare minimum, a clock circuit, some decoding logic to access the memory, some actual memory chips, and some form of I/O port in order to actually get information in and out of the CPU.

Which brings us to today. In your modern laptop or desktop computer, the CPU takes the form of a microprocessor, more commonly just referred to as a processor, which is a chunk of silicon a few square centimetres in area. But it’s still a central processing unit: it sits on a motherboard and is surrounded by other hardware including memory, bus logic, storage and other I/O devices.

Other than the complexity and speed of the system, and the number of CPU “cores” on the silicon, this architecture has stayed roughly the same for personal computers since the 1970s.

What About My Fridge? Introducing Embedded Systems

You are probably aware that your microwave and washing machine also have processors inside them.

Sure, they have a lot less computing power. They run a built-in, hard-coded program that just cooks your food or washes your clothes. The washing machine never has to cook, and the microwave never has to wash. And neither of them ever has to run first-person shooter games or edit photos (although there’s no accounting for what the future might bring!).

This kind of system is generally referred to as an embedded system because the processor is embedded inside an appliance or machine that performs a specialised task that might not be an obvious computing task. It may not even be evident that the machine has any kind of processor at all, as is the case with something like a toaster.

Embedded systems are everywhere. From thermostats and washing machines to cars, passenger airliners and satellites, they are literally all around us all the time. It’s an enormous global market.

Some embedded systems, particularly the main control systems in airliners for instance, are extremely powerful and are basically embedded computers. But those systems usually have smaller, “slave” systems around them that read sensors and move mechanical parts.

The Dawn of the Microcontroller

The vast majority of all embedded systems require relatively little computing power. Because they’re less complex, their CPUs can take up less space on the silicon and use less power. For that reason, manufacturers were able to build specialised chips that included a smaller CPU alongside some memory and lots of basic I/O functions. This reduced the amount of additional electronics needed, thereby reducing the size, power consumption and cost. They can be mass-produced very cheaply in their millions.

These specialised chips are known as microcontrollers, or MCUs.

The first microcontroller was produced by Texas Instruments and used internally in its calculators in the early 1970s. By 1976, Intel had adapted its own microprocessors to produce its first microcontroller, the 8048, which was used in IBM keyboards. A few years later Intel released the immensely successful 8051, which is still sold to this day!

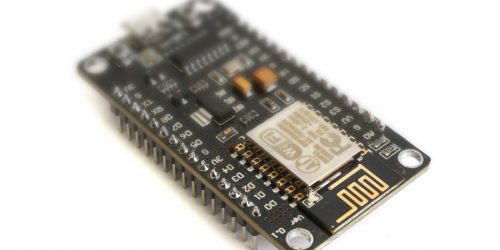

Other common MCUs today include Atmel’s AVR range and Microchip’s PIC family (Microchip acquired Atmel in 2016, so both now come from the same company). Plenty of other manufacturers including Cypress, Analog Devices, Freescale (formerly Motorola), Intel and National Semiconductor produce microcontrollers to meet the needs of just about any system architect.

Indeed, some of the earliest microprocessor designs have evolved into microcontroller chips. The popular 68HC05 is a direct descendant of the original Motorola 6800 microprocessor.

The power and abilities of MCUs vary hugely. The simplest are 8-bit devices that can measure only a few square millimetres, and the largest can be 32-bit cores with hundreds of pins. Their typical clock speeds range from 1MHz up to several hundred MHz. They usually incorporate on-board RAM, from a few hundred bytes to 1MB in extreme cases, with around 16KB being a typical figure. Flash storage is generally larger, from a few kilobytes to several megabytes.

What MCUs lack in memory and processing power, they make up for by providing a range of I/O signals that make them ideal for interfacing directly with peripheral hardware, including driving motors, servos and actuators, and reading analog signals. A typical mid-range microcontroller will boast a couple of dozen general-purpose I/O lines and several serial ports, both synchronous (such as I2C and SPI) and asynchronous (UARTs, as used for RS-232 links). Most incorporate analog-to-digital converters (ADCs) and pulse-width modulation (PWM) controllers, and many add digital-to-analog converters (DACs) too. All MCUs have at least one on-board timer, and often they’ll have special hardware functions for timing and generating external signals.
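
Two small calculations crop up constantly when using these peripherals: converting a raw ADC count into a voltage, and choosing the timer compare value that gives a PWM output a particular duty cycle. The sketch below assumes an ADC whose top count (2^bits - 1) corresponds to the reference voltage, and a PWM timer that counts from 0 to `period_ticks - 1` and holds the pin high while the count is below the compare value. Real parts differ in these details, so the datasheet has the final word; the function names are hypothetical.

```c
#include <assert.h>
#include <stdint.h>

/* Convert a raw ADC count to millivolts. Assumes the top count (2^bits - 1)
   corresponds to the reference voltage vref_mv. */
uint32_t adc_to_millivolts(uint32_t raw, unsigned bits, uint32_t vref_mv) {
    assert(bits >= 1 && bits <= 16);
    uint32_t full_scale = (1u << bits) - 1u;   /* e.g. 1023 for a 10-bit ADC */
    assert(raw <= full_scale);
    /* 64-bit intermediate avoids overflow; adding half the divisor rounds to nearest */
    return (uint32_t)(((uint64_t)raw * vref_mv + full_scale / 2) / full_scale);
}

/* Number of timer ticks in one PWM period. */
uint32_t pwm_period_ticks(uint32_t timer_clock_hz, uint32_t pwm_freq_hz) {
    assert(pwm_freq_hz > 0 && pwm_freq_hz <= timer_clock_hz);
    return timer_clock_hz / pwm_freq_hz;
}

/* Compare value for the requested duty cycle, assuming the pin is high
   while the timer count (0 .. period_ticks - 1) is below the compare value. */
uint32_t pwm_compare_for_duty(uint32_t period_ticks, unsigned duty_percent) {
    assert(duty_percent <= 100);
    return (uint32_t)(((uint64_t)period_ticks * duty_percent) / 100u);
}
```

With a 16MHz timer clock and a 20kHz PWM frequency, the period works out at 800 ticks, so a 25% duty cycle needs a compare value of 200. On a 10-bit ADC with a 3.3V reference, a reading of 512 corresponds to roughly 1652mV.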

A microcontroller will generally run a dedicated program to perform a specific task. This mode of operation is referred to as “bare metal,” because the code is run with no OS and talks directly to the hardware.
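
In bare-metal code the program reads and writes the chip’s peripheral registers directly, usually through pointers to fixed memory addresses, inside an endless “superloop”. The sketch below shows that shape for the microwave example: the magnetron relay may only be switched on while the door switch reports “closed” and the start switch is on. The pin assignments, register names and `firmware_main` (standing in for `main`) are hypothetical, and so that the sketch runs on a desktop, the “registers” are ordinary variables; on a real MCU they would be `volatile` pointers to addresses taken from the datasheet.

```c
#include <assert.h>
#include <stdint.h>

/* On a real MCU these would be memory-mapped registers at addresses from the
   datasheet, for example (hypothetical address):
       #define GPIO_IN (*(volatile uint32_t *)0x40020010u)
   Here they are plain variables so the sketch runs on a desktop. */
static volatile uint32_t sim_gpio_in;    /* stands in for the input-pin register  */
static volatile uint32_t sim_gpio_out;   /* stands in for the output-pin register */
#define GPIO_IN   sim_gpio_in
#define GPIO_OUT  sim_gpio_out

#define DOOR_CLOSED_BIT  (1u << 3)   /* hypothetical: door switch on input pin 3      */
#define START_BIT        (1u << 4)   /* hypothetical: start switch on input pin 4     */
#define MAGNETRON_BIT    (1u << 5)   /* hypothetical: magnetron relay on output pin 5 */

/* One pass of the control logic: heat only while the door is closed
   and the start switch is on. */
void control_step(void) {
    uint32_t inputs = GPIO_IN;                   /* read the pins once per pass */
    uint32_t needed = DOOR_CLOSED_BIT | START_BIT;
    if ((inputs & needed) == needed) {
        GPIO_OUT |= MAGNETRON_BIT;               /* drive the relay pin high */
    } else {
        GPIO_OUT &= ~MAGNETRON_BIT;              /* drive the relay pin low  */
    }
}

/* Bare-metal entry point: no OS, no return, just an endless loop. */
void firmware_main(void) {
    GPIO_OUT = 0;                                /* all outputs off at power-up */
    for (;;) {
        control_step();
    }
}
```

There is no scheduler, no file system and no other program competing for the CPU: the loop simply polls its inputs and updates its outputs for as long as the device is powered.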

For more complex systems, specialist operating systems do exist that can run on some higher-end MCUs. These are called RTOSes (Real Time Operating Systems). Common RTOSes include FreeRTOS and VxWorks, but there are a lot more out there.

The Incredible Shrinking Computer: the Birth of the System-on-Chip

During the 1990s, silicon vendors started cramming more and more functionality onto their chips. This was largely driven by the expanding mobile phone industry.

In the early days of GSM, a 2G handset contained maybe a dozen chips, giving rise to famously bulky handsets. Manufacturers had a strong incentive to make them smaller, lighter and less power-hungry. But cramming all those chips onto a single silicon die was a slow process.

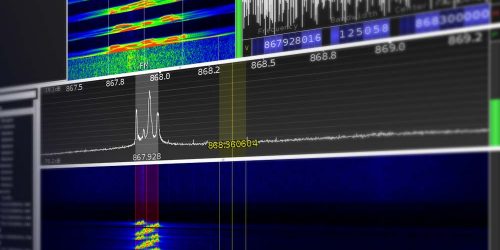

There was a primary CPU to handle the user interface and the upper layers of the GSM protocol stack. This needed quite a lot of RAM, and even more Flash memory for the bespoke operating system, application and protocol code. On top of that was a separate so-called baseband processor in the form of a digital signal processor (DSP), to handle the physical layer of the GSM protocol, including complex, mathematically-intensive operations such as channel coding and speech coding. The baseband also needed its own memory. Then there was the mixed-signal chip, which incorporated the lowest level of modem and radio-frequency (RF) functions.
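
Channel coding adds controlled redundancy so the receiver can correct bit errors. As an illustration of the kind of bit-level work a baseband DSP does, here is a sketch of a rate-1/2 convolutional encoder using the generator polynomials G0 = 1 + D^3 + D^4 and G1 = 1 + D + D^3 + D^4, which GSM specifies for full-rate speech (GSM 05.03, now 3GPP TS 45.003). It is only one piece of the real channel coder, which also adds parity and tail bits and interleaves the result, and decoding (typically with a Viterbi decoder) is far more computationally demanding than encoding. The function name is hypothetical.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Rate-1/2 convolutional encoder, constraint length 5.
   Generator polynomials (as used for GSM full-rate speech):
     G0 = 1 + D^3 + D^4
     G1 = 1 + D + D^3 + D^4
   `in` holds n bits (each 0 or 1); `out` receives 2*n bits: c0, c1, c0, c1, ... */
void conv_encode_r12(const uint8_t *in, size_t n, uint8_t *out) {
    unsigned history = 0;   /* bit k holds u(t-1-k): the previous 4 input bits */
    for (size_t t = 0; t < n; t++) {
        unsigned u  = in[t] & 1u;
        unsigned d1 = (history >> 0) & 1u;   /* u(t-1) */
        unsigned d3 = (history >> 2) & 1u;   /* u(t-3) */
        unsigned d4 = (history >> 3) & 1u;   /* u(t-4) */
        out[2 * t]     = (uint8_t)(u ^ d3 ^ d4);        /* G0 output bit */
        out[2 * t + 1] = (uint8_t)(u ^ d1 ^ d3 ^ d4);   /* G1 output bit */
        history = ((history << 1) | u) & 0xFu;          /* shift in the new bit */
    }
}
```

Feeding in a single 1 followed by four 0s (an impulse) produces the output pairs 11 01 00 11 11, which simply reads back the coefficients of G0 and G1 interleaved: a handy sanity check for any convolutional encoder.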

Over the course of around a decade, right up until the advent of smartphones, all these functions were compressed into a couple of chips. And at the same time handset makers were adding more features. Phones suddenly had to support Bluetooth. Then someone decided they all needed cameras. And with each additional piece of hardware, the application code and by extension the operating system became more complex.

The UK chip designer ARM was instrumental in this process. It licensed its processor designs, whose low power consumption and advanced power management were crucial to the mobile phone market. By the mid-2000s, many manufacturers were combining an ARM CPU core with a DSP from companies such as Analog Devices and Texas Instruments. In fact, these early SoCs were often designed by fabless chip companies and sold to handset manufacturers. Companies such as Qualcomm, Broadcom and MediaTek still operate in this space.

Getting Down With the Kids: The Smartphone Era

And then in 2007 came the iPhone, which was to be a game-changer for the SoC market, though not straight away.

At the time, the sheer amount of hardware and software crammed into the original iPhone was simply unheard of. The chips inside included:-

- APL0098 SoC (a 32-bit single-core ARM CPU plus GPU) combined with 128MB SDRAM, fabricated by Samsung

- Infineon PMB8876 S-Gold 2 baseband and multimedia processor (which is itself a complete ARM+DSP-based SoC)

- Infineon M1817A11 GSM RF transceiver

- CSR BlueCore4-ROM WLCSP Bluetooth modem

- Skyworks RF power amplifier

- Marvell 802.11 WiFi interface

- Wolfson audio processor

- Broadcom BCM5973A touchscreen processor

- Intel memory chip (32MB NOR Flash plus 16MB SRAM)

- Samsung 4/8/16GB NAND Flash ROM for disk storage

- a 2MP camera

This was an incredible achievement, but the chip count was reminiscent of early GSM handsets, and it’s hardly a surprise that Apple almost immediately started trying to cram as much of this functionality as possible into as few chips as it could.

Over the next decade, successive generations of iPhone, iPad and competing Android devices pushed the SoC ever-further.

By the time of the iPhone X, the primary SoC (called the A11 Bionic) included:-

- a 6-core 64-bit ARM CPU, 2 cores of which can run at up to 2.39 GHz

- a 3-core GPU, used for machine learning tasks as well as graphics

- an image signal processor with “computational photography” capabilities (e.g. facial recognition)

- a dedicated neural network engine for machine learning applications

- a motion coprocessor

- an ARM-based “secure enclave” security coprocessor

- 3GB of SDRAM.

The primary Apple A11 SoC is far more advanced than the APL0098 in the original iPhone. There is a huge amount of image processing, machine learning and multimedia processing going on inside the A11.

There’s so much silicon in there that it’s assembled using “package-on-package” technology, which stacks the memory package directly on top of the processor package.

And there are still a lot of external chips, including:-

- Qualcomm Snapdragon 1Gb/s LTE baseband (another complete multicore ARM+DSP-based SoC)

- Toshiba TSB3234X68354TWNA1 64 GB Flash

- Proprietary Apple audio codec

- NXP NFC module

- Various power management and amplifier parts

- 12MP camera

- 7MP camera

As you can see, Apple’s goal with its SoCs has been to pack as much heavy-duty processing as possible into a single package. Its chips are no doubt very expensive to produce, but like all trailblazing technology they have handy side effects for the rest of the industry: this level of integration has paved the way for much cheaper SoCs.

The Raspberry Pi: A SoC for the Masses!

The Raspberry Pi (RPi) is a very low-cost, single-board computer (SBC) developed by the Raspberry Pi Foundation, a charity based in Cambridge, UK. It was first released in 2012. The stated purpose of the Foundation is to promote education in computing around the world, which is the primary reason the RPi is so cheap. The initial version sold for $35 but there have been versions released that cost as little as $5.

What differentiates the RPi from apparently similar devices such as the Arduino platform is its computational power. The Arduino is aimed at almost the same market, looks similar and retails in the same price range, but the Arduino is microcontroller-based.

The RPi runs full-blown desktop operating systems and displays them on a monitor via an HDMI cable. It can actually be used as a low-cost desktop machine.

The most recent version of the Raspberry Pi packs a 4-core ARM Cortex CPU running at 1.4GHz, a 300-400MHz GPU with integrated HDMI output, 1GB of RAM, Ethernet, WiFi, Bluetooth, 4 USB ports, video camera input and audio I/O, plus many of the low-level I/O functions of the larger Arduino boards!

It’s probably safe to say the Raspberry Pi could not have been built without the existence of very cheap SoCs. Almost everything in the RPi is integrated into a Broadcom SoC, with the exception of the networking devices.

And it has spawned a number of similar devices, a trend that will no doubt continue, giving developers and hobbyists a superb range of platforms to choose from.

Conclusion

This article discussed the evolution of the microprocessor, from a replacement for the large CPU cabinets of old to the bewildering array of modern “processors” found in everything from toasters to smartphones.

The first microprocessor was invented in 1971 and within a few years dozens of others were on the market. Manufacturers pushed in two directions at once: to increase the processing power for the burgeoning PC market, and to reduce the chip’s footprint, power consumption and cost so they could be embedded into everyday appliances. This latter direction soon gave rise to the microcontroller or MCU.

MCUs were the staple of embedded systems for many years, and remain so for a lot of applications, but they were not powerful enough to run advanced applications, and a new level of integration began in the 1990s as the cellphone industry began to take off.

Integrating digital signal processing (DSP) cores alongside regular CPU cores and increased RAM and flash ROM was the goal of many silicon and fabless vendors in the early 2000s, and this gave rise to the modern system-on-chip (SoC).

Mass production and extreme integration by innovative companies such as Apple helped reduce the cost of earlier generations of SoC so devices like the Raspberry Pi could utilise this technology and offer it cheaply to everyone.